Earlier this year the phrase “vibe coding” was coined to describe using purely conversational chat to code with AI. It felt like a new world had been unlocked - a world in which the user did not need to understand the codebase of what they were building. Building an app was no longer a miracle, but a common occurrence - a full-stack app that would have taken a month could be built in ten minutes! In this fable of the tortoise and the hare, it seemed like the hare always won.

Why does it feel cheap?

However, it's one thing to quickly make something that works and another to make a truly great piece of software. Under the pressure of running out of tokens, users are expected to one-shot prompts, but novel software creation cannot be done in a single paragraph of text; it requires constant iteration. AI tools are not infallible. When you construct your software house through pure vibes instead of understanding, you’re essentially building a roof with holes. Suddenly, edge states don’t work, the interface breaks on small screens, and random components have extra spacing [1]. As a result, the open source design system, although a major evolution in accessibility and usability from years ago, becomes equated with cheapness [2].

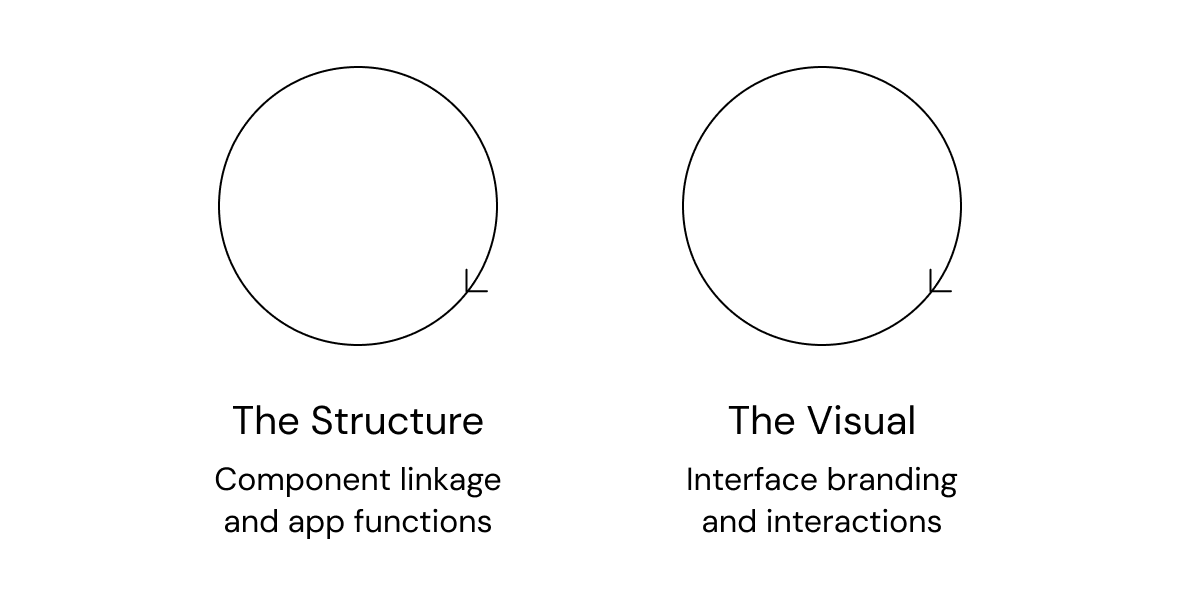

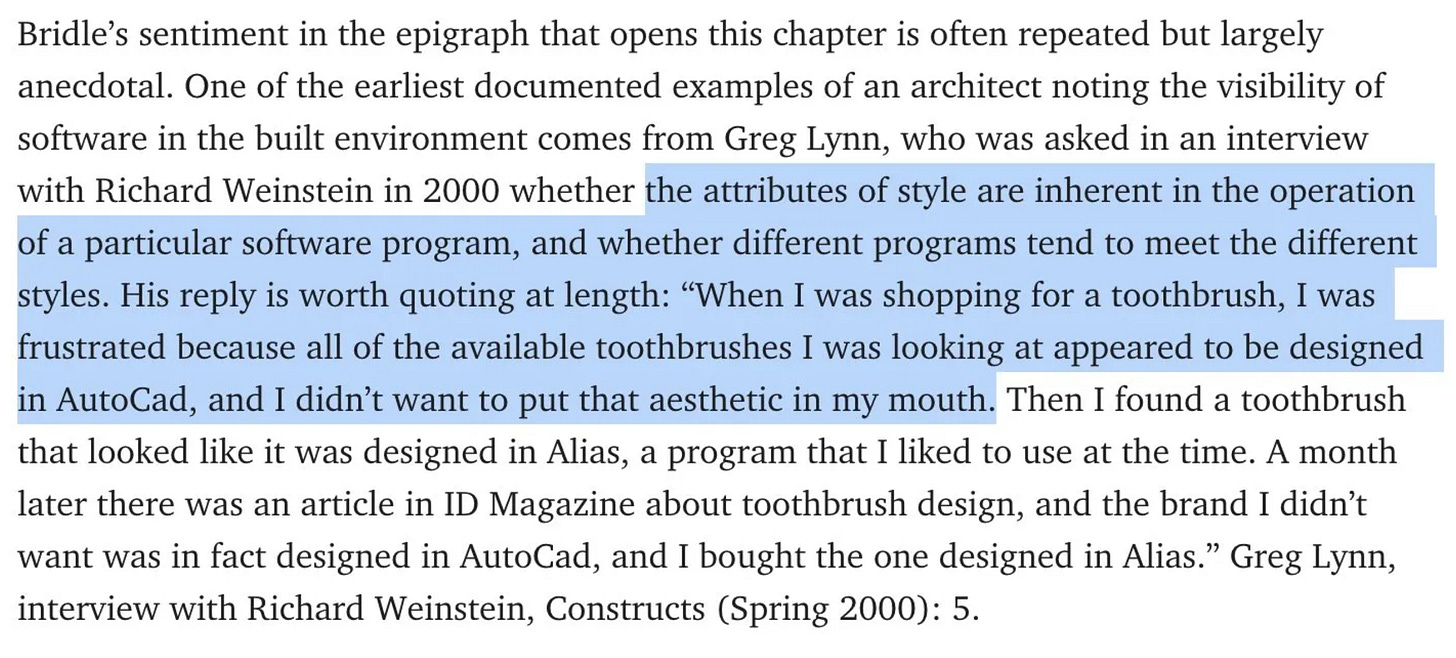

The tools used can be derived from the style - source

The downsides of ultimate flexibility

AI-powered tooling with chat as the sole input makes it difficult to scale complex software, but why? Because users can describe whatever they want, chat paradoxically frees them from the limits of a tooling interface, yet limits them for the same reason. Optimizing for chat does not free an interface from itself, and just as you can tell when something is made with Squarespace, you can tell when something was made with just AI prompting. Because there are no guardrails, the limit is the user’s own knowledge, and so people fail in the same ways again and again.

That being said, I’m not arguing to go back entirely to the days of prototyping tools. What I appreciate about AI-powered prototyping is its flexibility, because it’s centered around a true primitive - the code itself. I believe we can leverage the benefits of AI to make beautiful software creation accessible while giving choice back [3]. So how do we do it?

Have an opinion on the best way to create software and embed those principles

Building is complex. Before AI, to raise the ceiling you often had to raise the floor. Tooling would have a steep learning curve that, once climbed, would yield incredible benefits. Conversely, a lack of any opinion at all still shapes the outcome: not only poorly designed software, but also a user who grows frustrated that the house they have created is fallible.

While users familiar with code have a great time with prompting, those who are not will fall into holes of their own digging

It is possible for software to be extremely flexible and still have strong principles. For example, Figma is an abstraction layer on top of a blank canvas, but its many principles like frames, components, and auto layout extend beyond interface design to slide and graphic design as well.

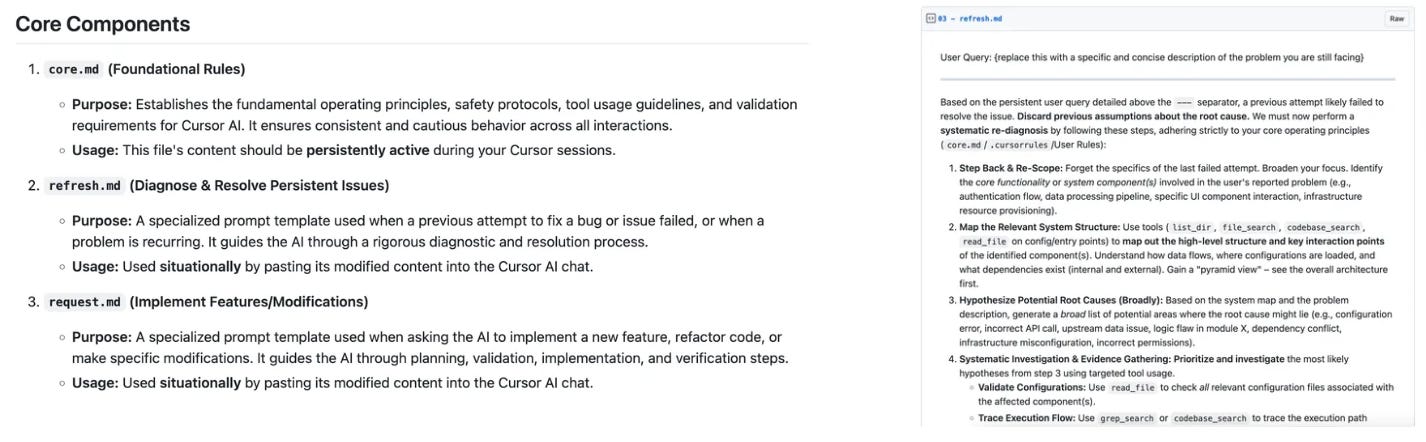

An easy solution, if we were to continue entirely in a chat-based paradigm, is to instill better defaults in system prompts to ensure specificity and reduce error, and to prompt the user when they have not provided enough detail.
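As a rough illustration of asking the user for missing detail, here is a minimal TypeScript sketch. The checklist, its keywords, and the function name `followUpQuestions` are all hypothetical and not taken from any real tool; a real system would likely rely on the model itself rather than keyword matching.

```typescript
// Hypothetical checklist: each entry pairs a topic with keywords that suggest
// the user already covered it, plus the question to ask if they did not.
interface DetailCheck {
  topic: string;
  keywords: string[];
  question: string;
}

const checks: DetailCheck[] = [
  {
    topic: "screen sizes",
    keywords: ["mobile", "responsive", "desktop"],
    question: "Which screen sizes should this work on?",
  },
  {
    topic: "empty and error states",
    keywords: ["empty", "error", "loading"],
    question: "What should appear when there is no data or something fails?",
  },
  {
    topic: "testing",
    keywords: ["test"],
    question: "Should tests be generated alongside the code?",
  },
];

// Returns a follow-up question for every topic the prompt does not mention.
function followUpQuestions(prompt: string): string[] {
  const text = prompt.toLowerCase();
  return checks
    .filter((check) => !check.keywords.some((word) => text.includes(word)))
    .map((check) => check.question);
}

// The prompt mentions "responsive", so only the other two topics are raised.
console.log(followUpQuestions("Build a responsive todo app"));
```

The point of the sketch is the shape of the interaction: the tool holds the opinion about what a complete request looks like, and the user is asked to decide rather than having the decision made silently.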

This popular Cursor rules file has specific defaults that build software testing principles into the code

But is there an interaction paradigm beyond chat that is malleable yet principled?

Process-derived principles

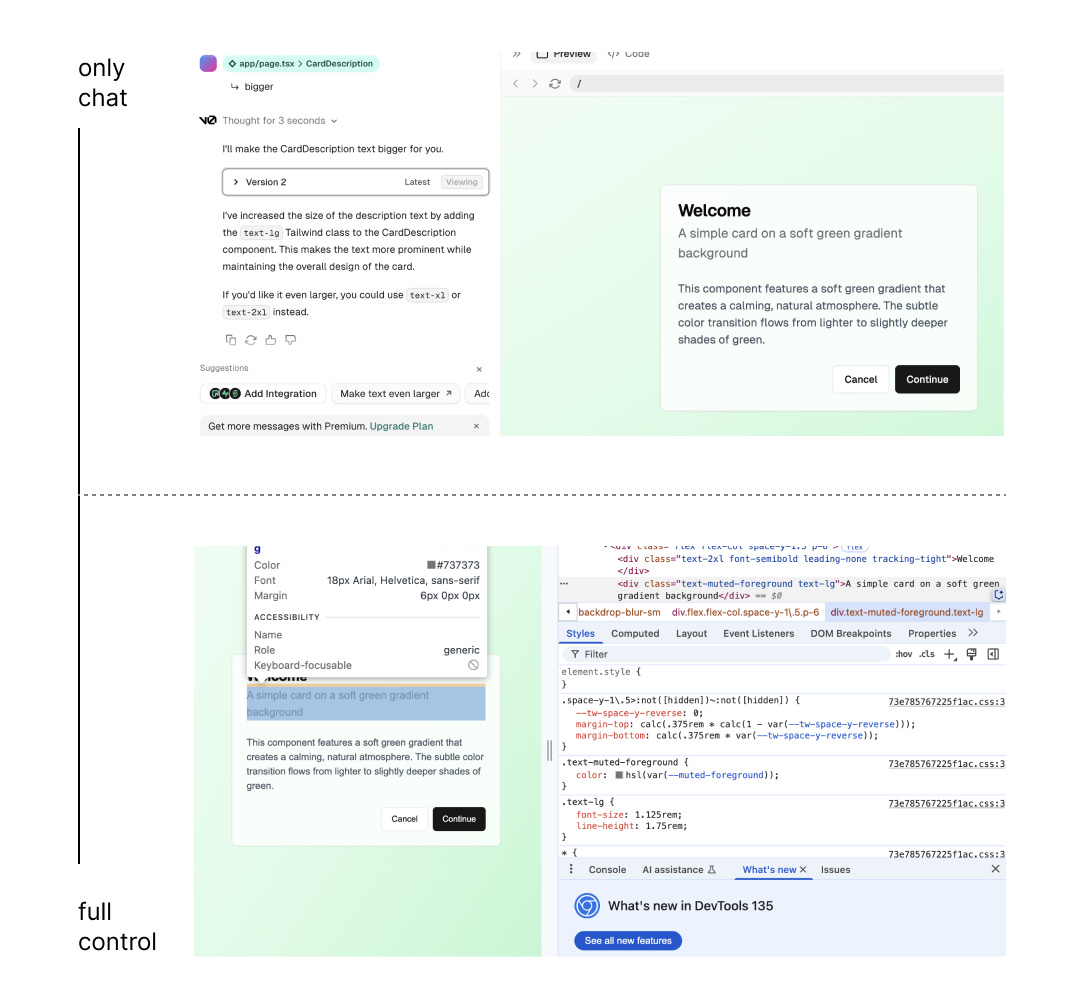

The current process for vibe coding with AI for nontechnical users (think products like v0, Lovable, etc.) optimizes for the below, because it places so much emphasis on chat as an abstraction and completely obscures the code.

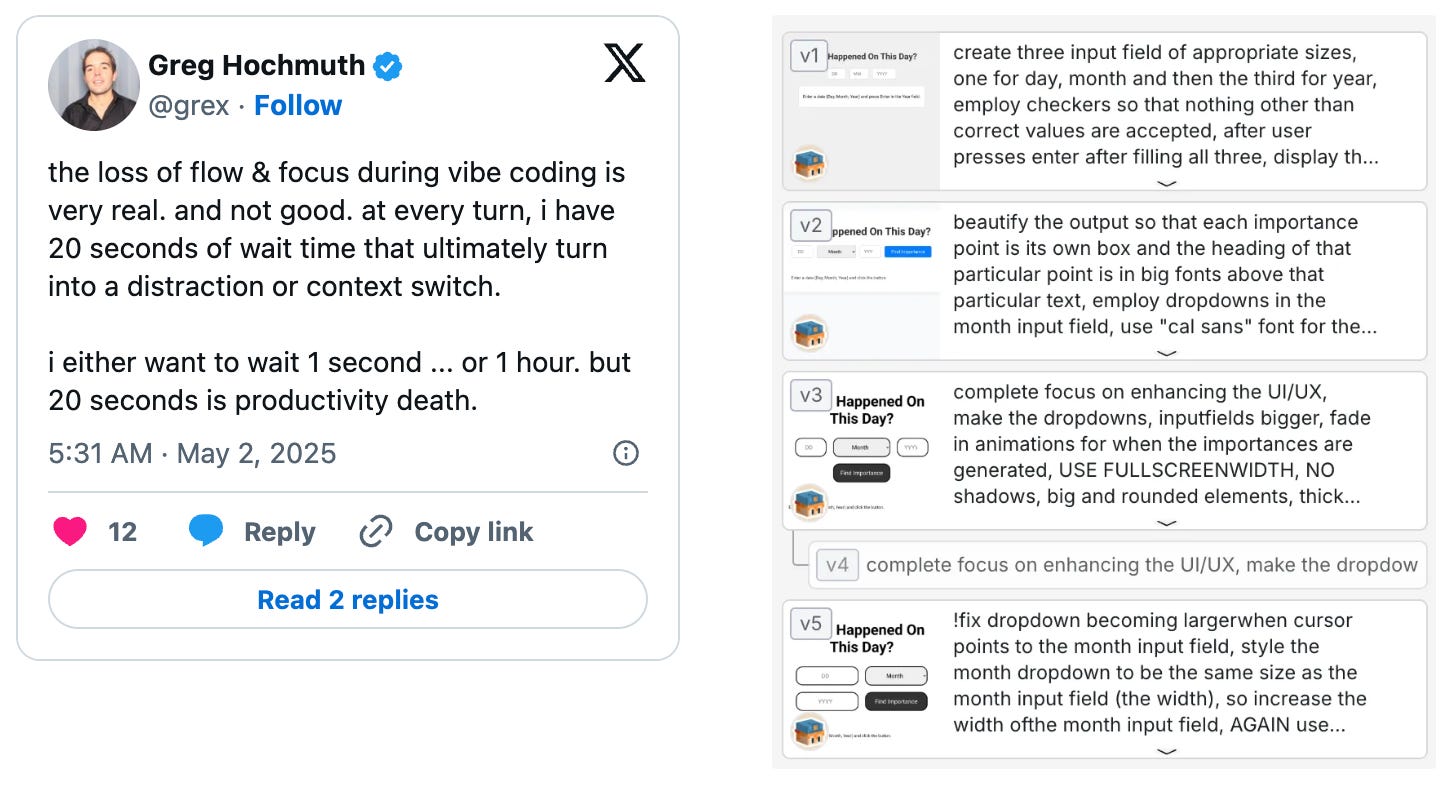

As a result, people rely solely on chat to iterate, which can lead to problems like losing flow state while waiting for things to load, relying on chat for inherently visual iterative processes, and forgetting what has already occurred.

Right: losing flow state. Left: Websim is mostly chat-based.

From my own experience creating personal software and talking with friends, the generative process of making software consists of two iteration cycles, and it’s best practice to iterate on each separately: first making sure the structure works, then applying visual fixes. To that end, new interfaces should make it easy to iterate in these two cycles at different levels of abstraction.

Iterating with a ladder of abstraction

The term “ladder of abstraction” is inspired by this Bret Victor article

Right now, many gen-AI coding platforms pigeonhole the user into relying completely on the chat, to the point that users unfamiliar with code will never look at the codebase at all. As a result, all actions, regardless of complexity, are given the same lever for control. For small changes like UI or variable iterations, this makes no sense, since editing the code directly would be a faster feedback loop.

Additionally, showing the user the “manual way” provides insight into how to read code and gives them more power to recognize and make the smaller decisions that allow craft and intent to shine, instead of entrusting everything to the AI.

How do we empower users to move up and down the ladder?

One problem with prior complex tools is that a specific task would be buried behind several dropdowns, making it hard to use. What if we leveraged the unique ability of AI to tailor the visual programming abstraction layers to the user? As a result, the user would gradually learn how to iterate without AI on high-frequency iterative tasks (changing the visual CSS, the layout, etc.).

This is a quick prototype without regard to visual design. View my UX work for a better portrayal of that quality bar.

Below I will describe the iteration interactions in relation to the structural and visual cycles.

Iterating functionally

When users first prompt the AI, they usually don’t write out all the functions that make up a program. Without that detail exposed, it can be hard for people to grasp the hidden decisions the AI has made behind the scenes. What if we could expose each layer and help users narrow in on the functions they’re interested in iterating on? That way, functional changes are more targeted and more likely to take the existing system into account.

The prototype above is for a simple JavaScript page, and there is much more to explore in terms of how to visualize state-based and component-based systems.
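To make the idea of exposing a program's functions more concrete, here is a minimal TypeScript sketch that lists the named functions in a JavaScript source string so an interface could show them as selectable layers. It is not how the prototype works; the names `outlineFunctions` and `FunctionEntry` are hypothetical, and the regex is a deliberate simplification.

```typescript
// Lists named function declarations in a JavaScript source string.
// Assumption: a simple regex is enough for a sketch. It misses arrow
// functions and methods, so a real tool would use a proper parser.
interface FunctionEntry {
  name: string;
  line: number; // 1-based line where the declaration starts
}

function outlineFunctions(source: string): FunctionEntry[] {
  const entries: FunctionEntry[] = [];
  source.split("\n").forEach((text, index) => {
    const match = /\bfunction\s+([A-Za-z_$][\w$]*)\s*\(/.exec(text);
    if (match) {
      entries.push({ name: match[1], line: index + 1 });
    }
  });
  return entries;
}

// A tiny example page script with two functions.
const page = [
  "function addTodo(text) {",
  "  todos.push(text);",
  "  render();",
  "}",
  "",
  "function render() {",
  "  list.innerHTML = todos.join(', ');",
  "}",
].join("\n");

// Logs one entry per declared function, with its name and starting line.
console.log(outlineFunctions(page));
```

With an outline like this, a user could pick `render` and scope their next request to that function instead of describing it from memory in chat.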

Iterating visually

There are already many good intermediaries between pure CSS and the output - the Chrome DevTools inspector allows you to modify properties and see changes live, and Figma’s primitives map closely to CSS (making it easy to create accurate code snippets without any AI). Although there could be many layers of abstraction for the different variables (and certainly better ways to interact with them), again we are illustrating the relation between the code and the output. This is very similar to the principles in Bret Victor’s Learnable Programming.
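As a small sketch of what a direct mapping between visual controls and CSS could look like, the TypeScript below turns a few control values into a CSS rule. The `VisualControls` shape and the `toCss` function are hypothetical and chosen only for illustration; they are not Figma's or DevTools' actual model.

```typescript
// Hypothetical visual controls, each mapped one-to-one onto a CSS property.
interface VisualControls {
  padding: number; // in pixels
  cornerRadius: number; // in pixels
  fill: string; // any CSS color value
}

// Builds a CSS rule so the user can see exactly what each control changes.
function toCss(selector: string, controls: VisualControls): string {
  return [
    `${selector} {`,
    `  padding: ${controls.padding}px;`,
    `  border-radius: ${controls.cornerRadius}px;`,
    `  background-color: ${controls.fill};`,
    `}`,
  ].join("\n");
}

console.log(toCss(".card", { padding: 16, cornerRadius: 8, fill: "#ffffff" }));
```

Showing the generated rule next to the control keeps the relation between the code and the output visible, which is what lets a user eventually make the change by hand.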

Implications for the future

All this to say, AI-assisted coding has changed my life. My foray into web development came from coding my portfolio from scratch every year, and even though that was straightforward, adding more complex features like locked pages became insurmountable when I ran into random package bugs. I would comb through Stack Overflow and GitHub forums for hours, until 2am, and make no progress. Beyond resolving bugs, AI also helped me understand motion libraries and connect to a backend. However, there’s a difference between using it as an intermediary to understanding and giving up on understanding entirely.

This in-between interface has several benefits. The first is that it brings back a truly immediate feedback loop that helps you stay in flow and also creates a more efficient experience for the AI. Instead of the AI having to index the whole codebase, users can point with greater specificity to what they’re building.

The second is that making computing more accessible to everyone leads to a democratization of ideas. To have good craft is to embrace the infinitude of decision-making when creating, and AI abstracts that away fully, making decisions for the user whenever they haven’t been specified in the directions, instead of having users choose for themselves.

Making self-expressive software relies on understanding what you’re building and allowing as much personal decision-making as possible. Pure chat is not a primitive for building a system - you need more ways to look at an object than in just one dimension.

If you're interested, this piece also exists on my Substack.

Side notes - I have been ruminating on this topic for a while. I had previously written my thoughts on genAI for images and how it lifted decisions from the creator, and I wondered how those metaphors extended to AI-generated code. Coding can be intimidating and not immediately intuitive, so I hope to see a future where AI can be used to progressively help the user play with and understand this medium. Note of thanks to Justin for sharing Bret Victor’s articles with me!

Footnotes

1. That being said, one could ask: who cares, if it works? In The Crystal Goblet, a pivotal graphic design text, Beatrice Warde argues that the most beautiful wine glass is not the ornate one but the transparent one, the one that allows the true intention of the design to shine. Random UI bugs distract from the meaning of software.

2. There is also a personal and visual factor in designing UI that is very akin to graphic design: branding is hard to automate with AI because it requires constant updating with the cultural fabric. Communicative branding that expresses the meaning of the software is another exhibition of attention.

3. This is similar to Bret Victor’s principle that “Creators need an immediate connection to what they create”, from this talk: https://jamesclear.com/great-speeches/inventing-on-principle-by-bret-victor